TL;DR:

This guide breaks down the two main options for deploying DeepSeek R1 on Google Cloud Platform: Compute Engine (DIY with full-stack control) and Vertex AI (a fully managed, scalable solution).

In the first post of our series, we explored how open-source LLMs like DeepSeek R1 and Llama 2 are reshaping AI by offering flexibility, transparency, and cost efficiency. Now, it’s time to get practical. If you’ve decided to deploy DeepSeek R1 on Google Cloud Platform, there are two main paths you can take:

- Compute Engine: A DIY, customizable approach with full-stack control.

- Vertex AI: A fully managed solution designed for simplicity and enterprise-grade integration.

Each has its advantages, depending on your needs. Let’s break down both options so you can choose the right deployment path for your business.

Approach 1: Deploying DeepSeek R1 on Compute Engine (DIY Power)

If you prefer full control over your infrastructure, Compute Engine is your go-to. This approach is ideal for organizations that need custom configurations, strict compliance, or deployment in air-gapped (offline) environments. Here’s how to get started:

Step-by-Step Deployment on Compute Engine

- Create a Compute Engine instance with one or more GPUs (such as NVIDIA A100 or V100) to handle model inference or training.

- Access the configuration guide here: Compute Engine GPU Setup.

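- The right configuration depends on which DeepSeek R1 variant you select: the full 671-billion-parameter model needs a multi-GPU machine, while the distilled variants (1.5B to 70B parameters) can run on much smaller configurations.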

- Select a custom machine type if your workload needs specific CPU, memory, or GPU configurations.

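- Configure secure networking: place the instance in a Virtual Private Cloud (VPC) network with restrictive firewall rules, and use VPC Service Controls to limit data access and exposure across Google Cloud services.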

- Install Docker and set up the DeepSeek R1 container.

- Pull the pre-built Docker image from your container registry or build your own.

- Use curl or a custom script to call DeepSeek R1 from other servers, local machines, or other applications.

- Reduce costs for long-running workloads: sustained-use discounts are applied automatically to eligible machine types, and committed-use discounts can lower costs further.
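As a sketch of the "custom script" option, the Python below sends a prompt to DeepSeek R1 running on the instance using only the standard library. It assumes the container runs an inference server that exposes an OpenAI-compatible API (vLLM, for example, does this) on port 8000 at `/v1/chat/completions`. The server address and the model name `deepseek-ai/DeepSeek-R1-Distill-Qwen-7B` are hypothetical placeholders; use whatever your own deployment was started with.

```python
import json
import urllib.request

# Hypothetical placeholders: replace with your instance's internal IP, the port
# your inference server listens on, and the model name it was launched with.
SERVER_URL = "http://10.128.0.2:8000"
MODEL_NAME = "deepseek-ai/DeepSeek-R1-Distill-Qwen-7B"


def build_chat_request(base_url: str, model: str, prompt: str,
                       max_tokens: int = 512) -> urllib.request.Request:
    """Build a POST request for an OpenAI-compatible /v1/chat/completions route."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_deepseek(prompt: str, timeout: float = 300.0) -> str:
    """Send one prompt to the server and return the text of the first choice."""
    request = build_chat_request(SERVER_URL, MODEL_NAME, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # OpenAI-compatible servers return the reply under choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

Calling `ask_deepseek("Explain VPC firewall rules in two sentences.")` returns the model's reply as a string; a curl call would POST the same JSON body to the same URL. Keeping the server on an internal IP and calling it from inside the VPC avoids exposing the model publicly.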

Use Cases for Compute Engine

- Research & Development: Perfect for experiments that require high flexibility and frequent hardware tweaks.

- Air-Gapped Environments: Useful for industries that require on-premises-style control or fully isolated cloud networks.

- Custom Workflows: Organizations with proprietary data pipelines and unique security needs often benefit from this approach.

GCP Advantage:

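With Compute Engine, you can leverage custom machine types and sole-tenant nodes. Sole-tenant nodes are physical servers dedicated to your project's VMs, isolating your workloads from other customers' VMs, which can help meet compliance and regulatory requirements. (Data residency itself is governed by the region and zone you deploy into.)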

Learn more here: Sole-Tenant Nodes Documentation.

Approach 2: Deploying DeepSeek R1 on Vertex AI (Managed Simplicity)

If you’re looking for speed and simplicity, Vertex AI offers a fully managed solution. Vertex AI handles much of the heavy lifting, such as infrastructure scaling, endpoint management, and MLOps integration.

Step-by-Step Deployment on Vertex AI

- Prepare a pre-built container with DeepSeek R1 or use a custom container image.

- Vertex AI lets you upload a model backed by your container image and deploy it to an endpoint without managing the underlying servers. More info: Deploying Custom Models on Vertex AI.

- Create a model endpoint with autoscaling enabled to handle variable workloads efficiently.

- Enable monitoring and logging to track usage, performance, and errors in real time.

- Manage your model in the Model Registry, where uploaded models are registered, for version control, governance, and streamlined model management.

- Use the Vertex AI SDK or REST API to call the DeepSeek R1 model.
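To illustrate the last step, the sketch below calls a deployed endpoint through the Vertex AI REST API's `predict` method using only the Python standard library. The project ID, region, and endpoint ID are hypothetical placeholders, and the access token is assumed to come from `gcloud auth print-access-token`. The instance fields (`prompt`, `max_tokens`) are also an assumption: the request schema is defined by the serving container you deployed, not by Vertex AI itself.

```python
import json
import urllib.request

# Hypothetical placeholders: substitute your own project, region, and endpoint ID.
PROJECT_ID = "my-project"
REGION = "us-central1"
ENDPOINT_ID = "1234567890"


def build_predict_request(project: str, region: str, endpoint_id: str,
                          access_token: str, prompt: str,
                          max_tokens: int = 512) -> urllib.request.Request:
    """Build a POST request for the Vertex AI endpoints.predict REST method."""
    url = (
        f"https://{region}-aiplatform.googleapis.com/v1/"
        f"projects/{project}/locations/{region}/endpoints/{endpoint_id}:predict"
    )
    # Assumed instance schema: the serving container decides which fields it accepts.
    payload = {"instances": [{"prompt": prompt, "max_tokens": max_tokens}]}
    return urllib.request.Request(
        url=url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def predict(access_token: str, prompt: str, timeout: float = 300.0) -> list:
    """Call the endpoint and return the raw 'predictions' list from the response."""
    request = build_predict_request(PROJECT_ID, REGION, ENDPOINT_ID,
                                    access_token, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)["predictions"]
```

For example, `predict(token, "Draft a short product FAQ.")`, where `token` holds the output of `gcloud auth print-access-token`. With the `google-cloud-aiplatform` SDK installed, `aiplatform.Endpoint(...).predict(instances=[...])` makes the equivalent call. Because autoscaling and monitoring are handled on the Vertex AI side, the client code stays this small.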

Use Cases for Vertex AI

- Rapid Prototyping: Great for teams testing new use cases or applications that need fast, scalable infrastructure.

- Enterprise Integration: Ideal for organizations that require built-in audit trails, monitoring, and security compliance for production-ready AI models.

- MLOps Integration: Vertex AI offers native support for automated workflows, including CI/CD pipelines, version tracking, and retraining pipelines.

GCP Advantage:

Vertex AI provides out-of-the-box model monitoring, automatic scaling, and integration with the Model Registry—features that make managing enterprise AI models faster and more reliable. Learn more about Vertex AI here: Vertex AI Overview.

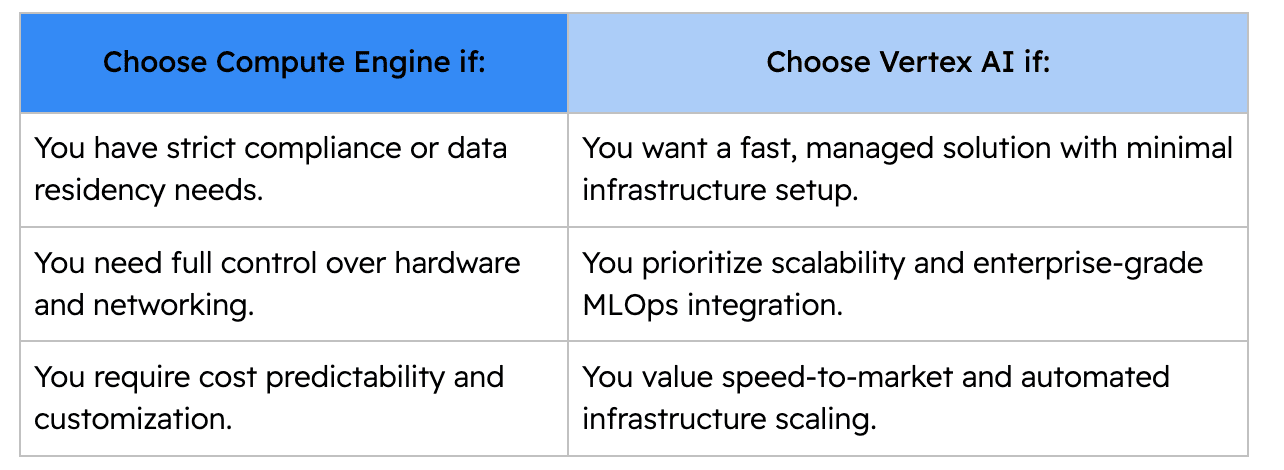

Compute Engine vs. Vertex AI: Which Path Should You Choose?

Choosing between Compute Engine and Vertex AI depends on your organization’s priorities and resources. Here’s a quick cheat sheet to help you decide:

By offering both deployment paths, GCP allows businesses to scale AI solutions flexibly, whether they need full-stack control or enterprise simplicity.

Looking Ahead

In this post, we’ve outlined two ways to deploy DeepSeek R1 on GCP—through Compute Engine or Vertex AI. Each option caters to different business needs, from full infrastructure control to fully managed simplicity.

Next in this series, we’ll get into optimizing model performance, covering everything from cost-saving tips to fine-tuning strategies for high-demand applications. So stay tuned!

Ready to deploy your LLM today? Schedule a consultation below to explore the best path for your business.

Adam Morgan

Cloud Engineer

Schedule a consultation

Embrace the power of secure cloud and AI solutions with Tridorian. Reach out to learn how we can make a difference.